Event cameras offer unparalleled advantages for real-time perception in dynamic environments, thanks to their microsecond-level temporal resolution and asynchronous operation.

Existing event-based object detection methods, however, are limited by fixed-frequency paradigms and fail to fully exploit the high temporal resolution and adaptability of event cameras.

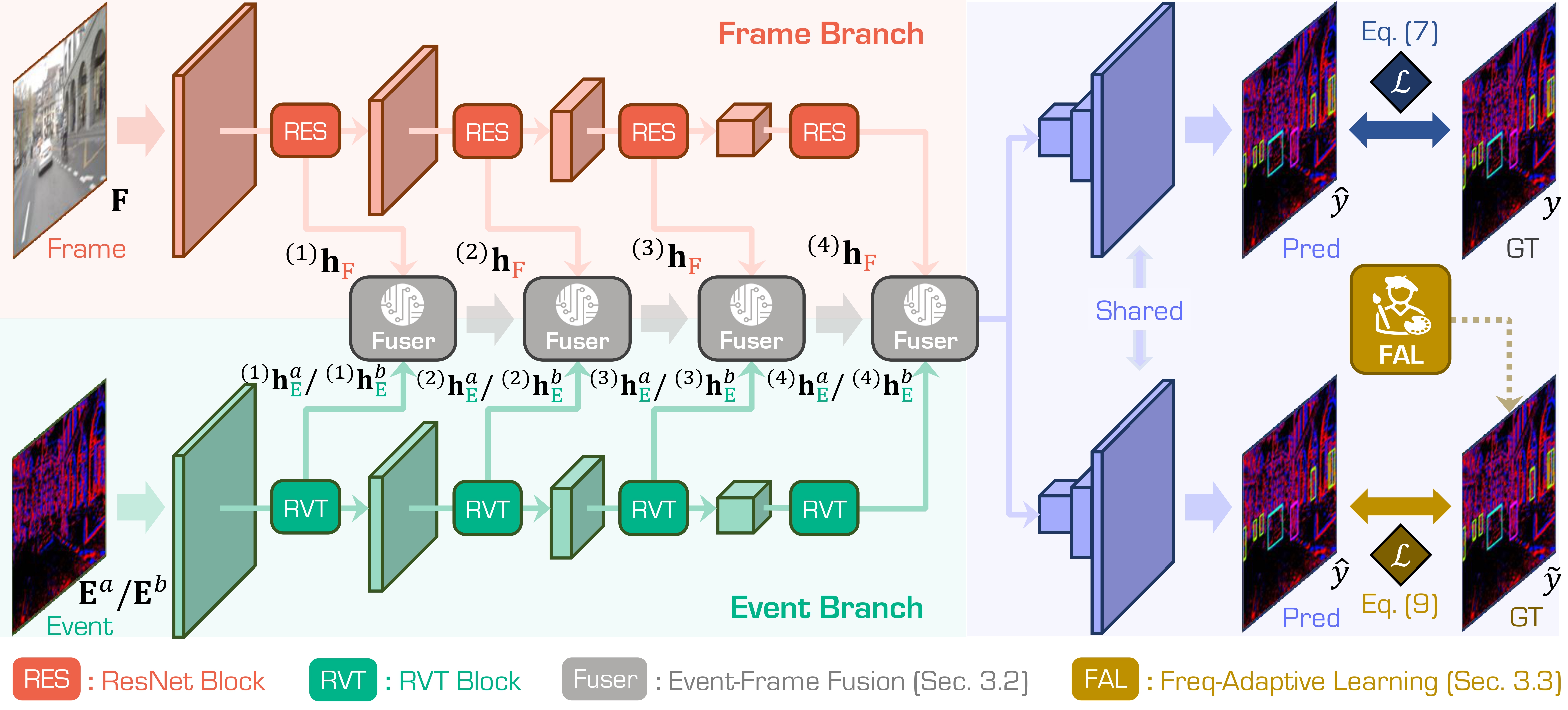

To address these limitations, we propose FlexEvent, a novel event camera object detection framework that enables detection at arbitrary frequencies.

FlexEvent consists of two key components: FlexFuser, an adaptive event-frame fusion module that integrates high-frequency event data with rich semantic information from RGB frames, and FAL, a frequency-adaptive learning mechanism that generates frequency-adjusted labels to enhance model generalization across varying operational frequencies.

This combination allows FlexEvent to detect objects with high accuracy in both fast-moving and static scenarios, while adapting to dynamic environments.

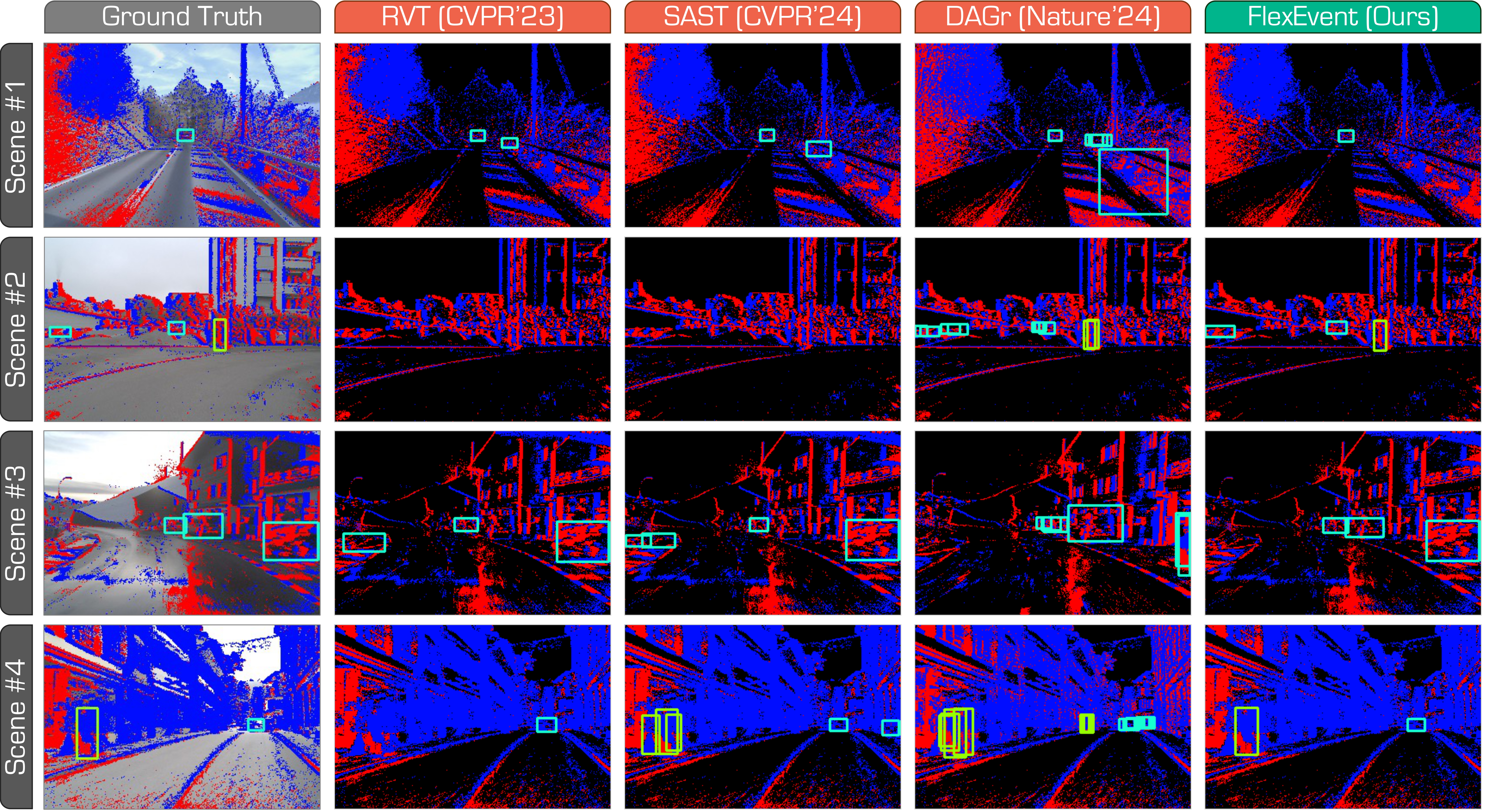

Extensive experiments on large-scale event camera datasets demonstrate that our approach surpasses state-of-the-art methods, achieving significant improvements in both standard and high-frequency settings.

Notably, FlexEvent maintains robust performance when scaling from 20 Hz to 90 Hz and delivers accurate detection up to 180 Hz, demonstrating its effectiveness in extreme conditions.

Our framework sets a new benchmark for event-based object detection and paves the way for more adaptable, real-time vision systems.
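To make the operating frequencies mentioned above concrete, the following minimal sketch shows how a detection frequency maps to an event-accumulation window, and how a sorted stream of event timestamps could be split into such windows. It is an illustration only, not the FlexEvent implementation: the function names `window_duration_us` and `slice_events`, the microsecond timestamp unit, and the choice of back-to-back, non-overlapping windows are all assumptions made for this example.

```python
from bisect import bisect_left


def window_duration_us(freq_hz: float) -> float:
    """Length of one detection window in microseconds (hypothetical helper)."""
    return 1_000_000.0 / freq_hz


def slice_events(timestamps_us, freq_hz):
    """Split sorted event timestamps into consecutive windows.

    Assumes timestamps are sorted, in microseconds, and that windows are
    non-overlapping and start at the first event. Returns a list of
    (start_index, end_index) pairs, with end_index exclusive.
    """
    if not timestamps_us:
        return []
    period = window_duration_us(freq_hz)
    t0 = timestamps_us[0]
    n_windows = int((timestamps_us[-1] - t0) // period) + 1
    bounds = []
    for k in range(n_windows):
        # Binary search for the first event at or after each window edge.
        lo = bisect_left(timestamps_us, t0 + k * period)
        hi = bisect_left(timestamps_us, t0 + (k + 1) * period)
        bounds.append((lo, hi))
    return bounds


# Window lengths at the frequencies named in the text.
for f in (20, 90, 180):
    print(f"{f} Hz -> {window_duration_us(f) / 1000:.2f} ms per window")

# Toy event stream (timestamps in microseconds), sliced at 20 Hz.
events = [0, 10_000, 49_999, 50_000, 60_000, 100_000]
print(slice_events(events, 20))
```

Under these assumptions, moving from 20 Hz to 180 Hz shrinks each window from 50 ms to roughly 5.6 ms, so each detection step sees far fewer events; this is one way to picture why high-frequency operation is harder than the standard setting.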